I mean, what stage of the S curve is this?

It’s been a tough week for the AI haters; not only because of Ghibli Day (if you aren’t in the loop, congratulations for touching grass, but also open any Twitter feed and start scrolling) but also since Dwarkesh’s new book The Scaling Era is now out on Stripe Press, chronicling the oral history of AI so far. And the overall vibe this week got me thinking about an old series of essays I wrote at Social Capital - the Scarcity and Abundance series.

Those old Scarcity and Abundance posts were actually two different series I wrote at Social Capital, one in 2016 called “Emergent Layers” that iterated on The Innovator’s Dilemma, and a second in 2017 about positive feedback loops and Red Queen’s Races in environments of abundance. I think they hold up, 8 years later. They describe 2025 pretty darn well.

I’m gonna revisit them today. Now that we’re solidly somewhere on the Agent S-curve - and you should read Dwarkesh and others for how that happened, not me - how do we start sorting out what resulting behaviour is actually new, what will continue to feel forced, and what’s next up that’s non-obvious? Three themes stood out to me, on reread, as plausible narratives for the next few years:

Affirming the idea of “light versus heavy businesses”, and how we think about software businesses and their profit potential

The eclipse of “Code as Capital” by a new metaphor that’s closer to “Code as Labour”

Agents that execute work outside of firms are more interesting to me than those that execute work within firms.

Let’s go!

Understanding Abundance

It’s been 8 years, so let’s do a refresher on the two series I’m talking about:

2016: Emergent Layers

2017: Understanding Abundance

The two series each tell a part of the story of how you get virtuous cycles that drive the cost of some critical input lower by orders of magnitude - Moore’s Law, Network Connectivity, and now the cost of reasoning and inference. In each case, consumers violently adopt a new S-curve when they are both overserved by what exists already (and like the new thing because it’s cheaper), and also underserved by the existing solution (and like the new thing because it’s better).

Real S curves are pulled forward by market demand that violently appears, almost as if out of thin air. But at the beginning of the cycle, customers are jaded and “overserved” by existing products and incumbent businesses. Their value propositions are complex, and the customer has to consider many different factors in selecting a product. These are not the kind of starting points from which you get explosive new growth; something new has to happen.

The spark that ignites an adoption curve is when a technological unlock (“newly abundant resource”) suddenly offers end users a new kind of product, sometimes a single killer feature, which is “disruptive” in the sense that it focuses on a single, newly practical value proposition compared to incumbents and ignores the “legacy” checkboxes. The decision to use the product is a quick “Yes or No” decision, not a check box ordeal.

A virtuous cycle kicks off where increasingly diverse, highly specified solutions both a) pull infrastructure forward, and b) make consumer choice even easier for product adoption (you can just try things, and it’s a yes/no decision as to whether you want to or not.)

A new “n of 1” company emerges for each of these cycles (approximately every 6 years?), that captures the scarce resource. It is not a mystery who the n of 1 company is. Everybody buys their stock, and they make a gazillion dollars.

The interesting action lies in what people do with the newly abundant resource. It’s where the new behaviour is that really defines the era. For example, in a previous era of the web where Google was the n of 1 company, all of the interesting action was in AdWords and online advertising, where a new kind of “underserved customer” emerged with basically infinite demand to put the product to useful work, in markets like travel and ecommerce.

Legacy companies don’t suddenly wither; they remain profitable for a long time, and are often put forth as “beneficiaries” of the new trend. But their product offerings can feel forced; the new tech compels them to overserve their customers even more, rather than deliver something truly new. (i.e. “now your email is sorted by AI relevance, and not by date!”) While it’s tempting to judge the new technology by these annoying intrusions, they are not the main story.

With that recap in place, we can pencil out the current state of software and AI-driven product roadmap development onto four quadrants: 1) the “Legacy” action that feels forced and tired, 2) the newly abundant resource that’s suddenly too cheap to meter, 3) the customers who can’t get enough of it, and 4) Nvidia.

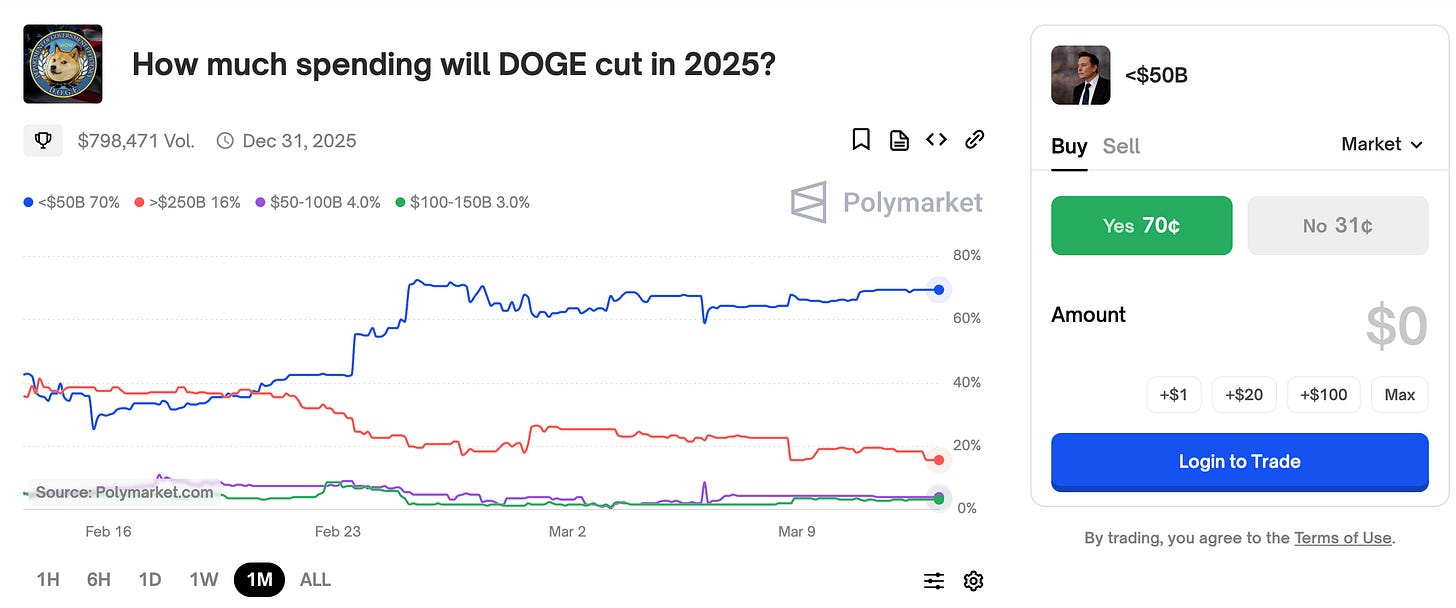

Profit potential and the Red Queen’s Race

The first point to make here is to just ask the question: in these two quadrants where all of the action is happening, who is going to actually make money over the long run? There’s no doubt that real value is getting created for customers, but it’s less obvious which kinds of businesses are poised to capture defensible margin. Switching costs are just really, really low - which is, again, one of the bona fide hallmarks of the abundance cycle that I wrote about years ago. There are some whispers this week (that I won’t link to, because they’re just rumours) of various prominent AI startups being not-so-truthful about their revenue growth, which I think says something about the general mood: not that people are cheating, but that people are skeptical about the durability of any of these businesses.

AI today is a Red Queen’s Race all right: where you have to keep running faster and faster just to stay in place. The result is a harder challenge for both the pointy businesses (to stay ahead of consumer expectations) and the utility businesses (to keep deploying capital at immense scale, into things like data centres) to actually capture much of the value they’re creating, yet a hard cycle for either of them to escape.

(From 2017)

Amjad Masad of Replit, one of the shining stars of the Newly Abundant quadrant, shared on TBPN why even quick revenue is no guarantee of upward success anymore: the switching cost is so low between these different (and all excellent) products that practically all of the immense value being created is ending up as consumer surplus. Which is a great thing! Good luck picking winners on a valuation-adjusted basis, though.

(While we’re here, can we give a shout out to the Technology Brothers for creating such a great franchise, in such a short period of time? I’ve never seen anything like it. Hats off.)

We’re seeing the best example yet of “pointy businesses and utility businesses” being driven by a relentless cycle of specialization and differentiation, underlying infrastructure pull-forward, and consumer choice getting easier and easier. Amjad, understandably, talks about having to deliver “the whole burger” for customers as a way to remain sticky and differentiated. I expect the accelerating treadmill to keep accelerating for some time - I’ve never seen a Red Queen’s Race like this.

Peak “Code as Capital”?

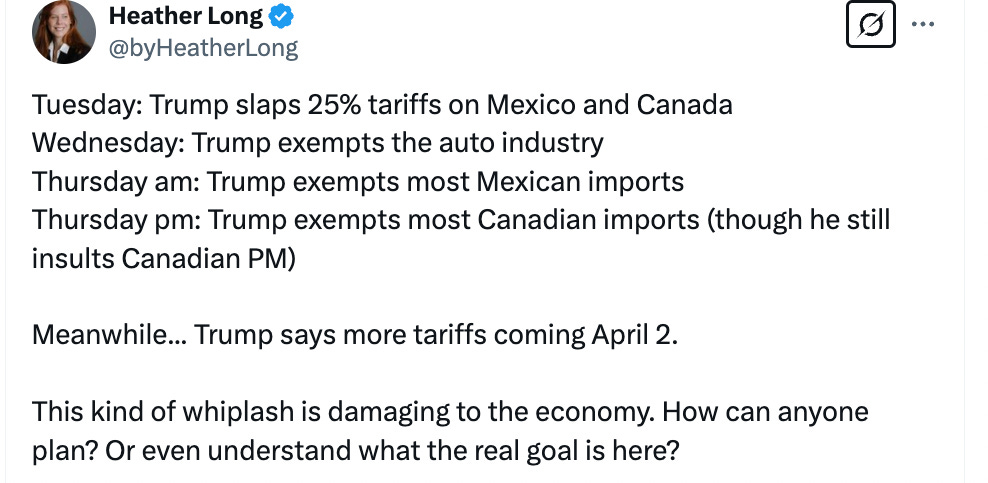

Meanwhile, if we refocus our attention to the companies that already were big and advantaged, we see another classic “Scarcity and Abundance” theme play out predictably: the idea of the overserved customer getting even more overserved as a compulsive response from the incumbents.

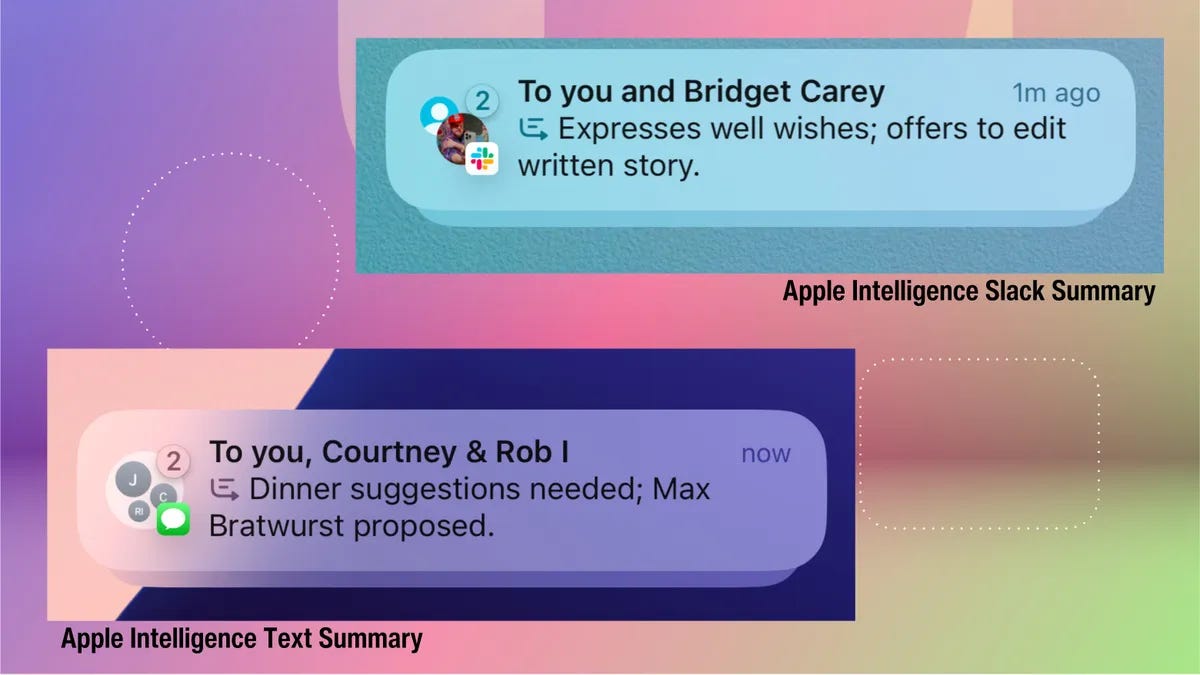

From CNET

Very early on in technological S-curves, a narrative is often put forth that incumbents stand to benefit the most from this change - “this isn’t disruptive, it’s sustaining!” This was notably true for AI. There was a pretty conventional take, not long ago, that went: “AI is all about data, which the big companies have. And it’s going to be all about making existing products more contextually useful, which the big companies are best positioned to exploit.”

That’s not really what happened though, is it. The most beloved new products - ChatGPT, Claude, Cursor, Replit - all come from startups. To the degree that tech giants’ versions do get adopted, as far as I can tell this is only from distribution advantages and not from any product advantage. Furthermore, (with some exceptions, like NotebookLM), the big tech giants with all our user data, all of the “contextual advantage” that was supposedly so important, compulsively ship a lot of annoying, forced features that no one really wants. (To their credit, the big tech giants have other pots on the stove that are way more beloved and successful, like Llama.)

Knowing all of our user context has not yet been the advantage people thought it was. Users are perfectly happy, it turns out, to bring the context they care about to the product, and then use the product as a focused tool to accomplish a specific task. For all the ink spilled about AI as an “orchestration tool” that knows my context and saves me time, more of the actual AI uptake has been focused on doing the end tasks. I expect that to continue.

If you try to put your finger on what exactly distinguishes the products that feel magical from those that feel like slop, you’ll notice something interesting. The pointless incumbent products - Genmoji or Text Summaries from Apple, “contextual” anything from Google and Microsoft, that kind of stuff - express an ethos of maximizing the value of a software asset of some kind. There’s a whiff of attitude where “the codebase is the capital”, and the point of all these AI tools is to keep drilling for undiscovered value in the asset.

Contrast this to everywhere I see people ravenously using new tools, like Cursor for coding, OpenAI Operator, or even fairly “basic” uses like lawyers using NotebookLM to summarize case documents in a way they can listen to in the car. There is no concept of an “asset” here; the value of the product to the user does not really depend on rich context, network effects, or some other obvious software incumbency. The software is just doing work, and the work is tangibly value-additive, even if it requires some human supervision.

It might be a bit too flippant to say, “We’re evolving from a mindset where the codebase is capital (the past few decades of software) and into a mindset where code is labor.” But this is a blog post, so it suits the medium. And it suits today’s energy: new projects and startups are writing a lot more code on the basis of “does this make me money now” (what Simon Wardley would’ve once called “worth-driven development”): the codebase is more like a workforce to be trained than like a factory line to be architected. You still want to put thought in it, but it’s a different kind of thoughtfulness. And you expect profit generation out of it a lot more aggressively than with patient capital deployment.

If you’ve gotten this far and wondered why the logo I put in the “underserved customers” box is Y Combinator, this is why - because new startups are arguably the most overserved by the existing paradigm of “your codebase is your capital.” As a new company, you don’t need, or want, “capital” as such! You want signal and growth, and if that can be accomplished by “labor” rather than by capital, all the better, if the numbers work. It’s no great mystery why the current batch of YC companies is earning more revenue than any other cohort, by far - it’s almost like a manufacturer suddenly discovering an ultra-low-wage and reasonably highly skilled workforce available for hire. You’re not going to overcapitalize this opportunity; you’re just going to put your foot on the gas and go.

This is why I think it’s cute and possibly helpful to think of this period right now as Peak ‘Code as Capital.’ The true capital asset is becoming the big models and the chips; things you can finance, things you can rent. Going forward, we likely won’t romanticize individual companies or projects’ codebases as The Asset to the same degree, and I think that is a double-edged but interesting development.

The Ronald Coase Theory of Agents

The last idea I have here is likely going to be the controversial one. I’m actually above-average skeptical about the ability of AI agents to radically increase “useful work done” inside of big companies - but in contrast, I think there’ll be wild and unpredictable new value created in the interstitial space between companies. Let me explain:

We talked about Coase’s Theory of the Firm the other day, and I keep thinking back to it as an old idea with fresh relevance here. If you don’t recall Coase’s work, “The Nature of the Firm”, it goes like this:

Some kinds of economic activity get naturally organized inside firms, whereas other kinds get naturally organized between firms.

Markets generally work well, and strict classical economics would suggest that most productive work, over time, ought to be conducted by free economic agents and settled by market mechanisms.

But this doesn’t always happen. There are some kinds of costs - the costs of searching for information, the costs of protecting trade secrets, the costs of coordinating complex sequences of events - that are more easily borne within hierarchical companies than by free markets. Hence, “the boundary of a firm” spontaneously forms at the interface between work that economically makes sense to in-source versus outsource.

There’s one additional element here: the “O-Ring theory of productivity”. The O-Ring theory (named after the Challenger space shuttle disaster, where the failure of a single part ultimately doomed the flight) is about national economic development, but applies well to firms too: it argues that productive output is ultimately limited by the weakest link in a chain of economic activity, which in complex systems can be hard to identify. This idea fits really well with Coase. It says, look, firms naturally tend to in-source work that is highly susceptible to the O-Ring problem - the work that is complex and really value-additive.

Ultimately, the valuable work done inside companies is the work where human accountability is the primary bulwark against the O-ring problem. It acknowledges that complex decisions and accepted constraints need to have human owners, and that relationships between humans (notably, but obviously not exclusively, in reporting relationships) are ultimately the valuable stuff that companies are made of.

Okay, so, what does this have to do with AI agents? Well, my suspicion is as follows. One, combinations of AI and Human work could be notably vulnerable to the O-Ring problem. Their utility in executing a chain of tasks is only as valuable as the weakest link, very obviously. (If there’s one hallucinatory or badly-interfaced step anywhere that isn’t caught, you have to throw out the whole thing.) Firms have self-selected for solving O-Ring problems, over decades. That’s just what firms are.
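To put rough numbers on the weakest-link intuition, here is a minimal sketch. It assumes each step in a chain succeeds independently with some probability and that one uncaught failure spoils the whole output; that multiplicative form is my simplification for illustration, and the function names are made up for this post.

```python
from math import prod


def chain_success_probability(step_reliabilities):
    """Probability that every step in a chain succeeds.

    Assumes steps fail independently and that a single failure
    ruins the whole output (the weakest-link framing).
    """
    return prod(step_reliabilities)


def steps_until_below(per_step_reliability, threshold):
    """Smallest number of identical steps at which the chain's
    success probability drops below the given threshold."""
    if not 0 < per_step_reliability < 1:
        raise ValueError("per_step_reliability must be strictly between 0 and 1")
    if not 0 < threshold < 1:
        raise ValueError("threshold must be strictly between 0 and 1")
    probability = 1.0
    steps = 0
    while probability >= threshold:
        probability *= per_step_reliability
        steps += 1
    return steps


if __name__ == "__main__":
    # Ten steps that are each 99% reliable.
    print(round(chain_success_probability([0.99] * 10), 3))
    # Twenty steps that are each 95% reliable.
    print(round(chain_success_probability([0.95] * 20), 3))
    # Two excellent steps and one coin flip.
    print(round(chain_success_probability([0.99, 0.99, 0.5]), 3))
```

Under those assumptions, ten steps at 99% each still land at about 90%, but twenty steps at 95% each land at roughly 36%, and a single coin-flip step drags an otherwise excellent chain below 50%. Real workflows have retries and human review, so treat this as a way to see the shape of the problem, not a forecast.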

So I think you could construct a bear thesis that the closer a workflow is to the core of an average business, the less suitable agents are to help, for reasons that are readily explained by Coase’s theory of the firm. Maybe not for the very best companies, who truly master their internal agents, but for the average company to whom AI Agents doing real work is essentially a kind of outsourcing. I’m not quite as pessimistic as that, but I agree with Tyler Cowen’s post that any hope for 10% GDP growth or whatever to emerge out of existing firms (which, even in a very disruptive environment, make up most of GDP) is too hopeful.

This sounds pessimistic about the value that can be added by agents, but I’m actually arguing the opposite is true - the most interesting new kinds of work are at the “periphery”. Work that takes place out in the market, where there’s plenty of skin in the game, and more importantly, that the economy has already selected, via Coase’s theory, as an environment where the lower-trust, higher-agency, direct-consequences kind of transactions were naturally sorted. The world of commerce and trading, payments, insurance, capital markets, what have you - these are all areas that have already evolved heavy lines of defence in dealing with unexpected surprise (and the antifragility to exploit it). AI interoperability is moving ahead by leaps and bounds with Model Context Protocol, which is an important step for an emergent layer of work from independent agent composability. If you want to talk “Emergent Layers” and look at what’s next, this is where I’d spend my time.

And, of course, (I think you may see where this is going) - we have an excellent playground all set up where you can speed run all of those experiments to find out which ones work:

We’re seeing real traction on the idea that “stablecoins are the natural way that AI agents are going to make payments to each other”, but there’s another way to think about the opportunity here that I wrote about in the Abundance series in 2017, which is that cheap blockspace is the natural place where independent agents are going to write state. This is the radically interesting thing: the fact that we now have cheap public state and execution environments (like Solana and Base) allows AI agents (call them “onchain agents”, if you like) to just be independent, stateless, functional actors that “can just do things”. Some of the most important APIs for agents are going to be ones like Thirdweb, where agents can just use a traditional Web 2.0 API to read and write to shared, neutral state (blockspace) without needing their own RPC or anything. The result is an additional layer of composability that augments MCP, since there’s a valid, permissionless, shared state to work with.

From Thirdweb MCP Server 0.1 Beta

The end result looks like a virtuous abundance cycle where on one side (the right side, below) you have onchain agents that are able to just do things and be stateless actors (because actual state, like account balances and what have you, is just stored onchain in a neutral third place outside the boundaries of any company.) And on the left hand side, you have consumers of their work, which could be people or frankly could be other agents, but in either case, the decision to consume an agent’s work is a simple yes/no answer: “Is this exactly what I want? Yes/no!”

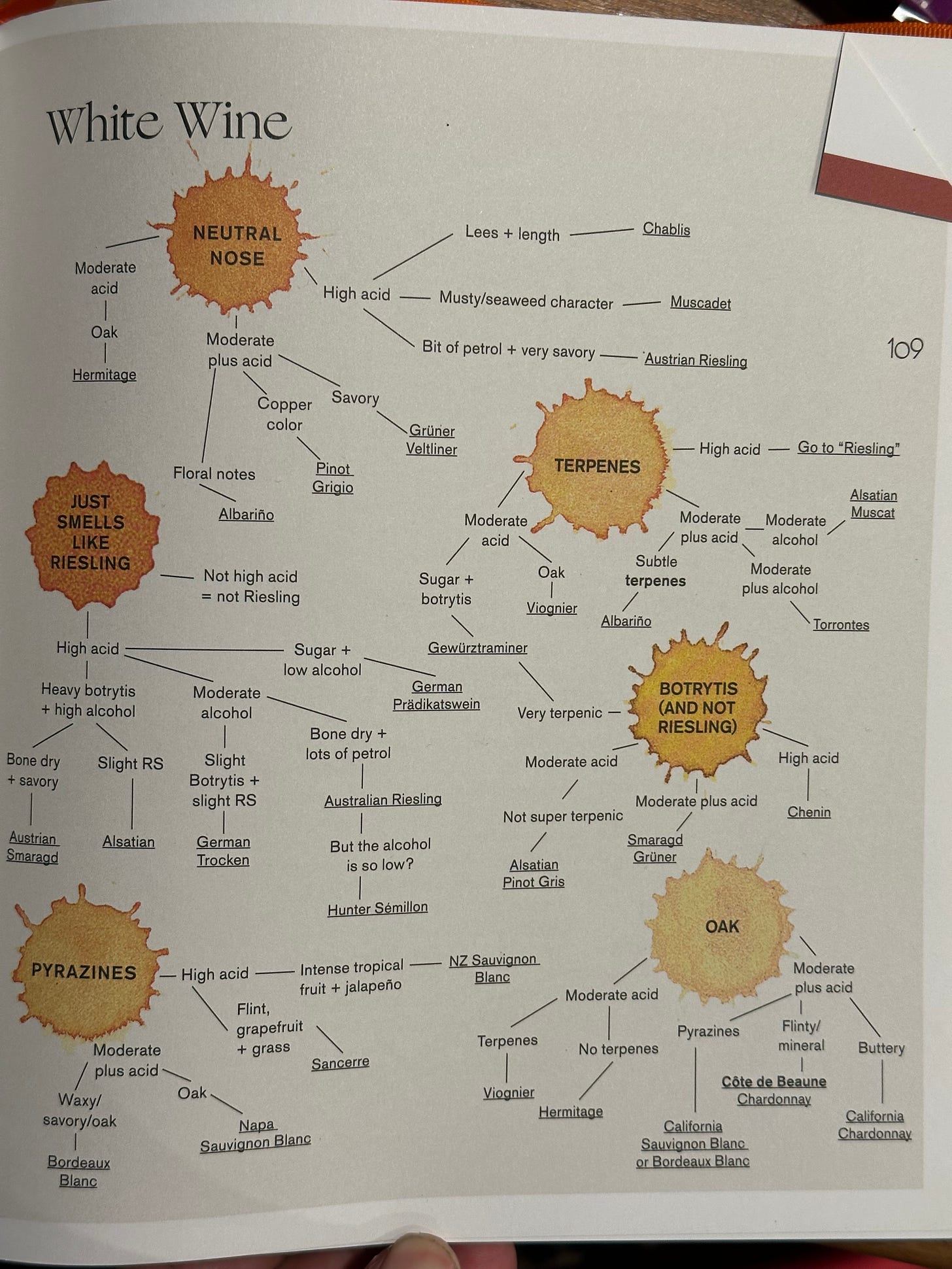
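If it helps to see the shape of this in code, here is a toy sketch of a stateless agent step working against shared state. Everything in it is hypothetical: `SharedLedger` is an in-memory stand-in for blockspace, not a real Solana, Base, or Thirdweb client, and `pay_if_accepted` is a name I made up. The only point is that the agent function keeps nothing between calls, and the consumer's side is a plain yes/no.

```python
from dataclasses import dataclass, field


@dataclass
class SharedLedger:
    """In-memory stand-in for neutral shared state.

    Hypothetical: a real deployment would read and write onchain state
    through an API or RPC, which this sketch does not model.
    """
    balances: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def balance_of(self, account):
        return self.balances.get(account, 0)

    def transfer(self, sender, recipient, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balance_of(sender) < amount:
            raise ValueError("insufficient funds")
        self.balances[sender] = self.balance_of(sender) - amount
        self.balances[recipient] = self.balance_of(recipient) + amount
        self.log.append((sender, recipient, amount))


def pay_if_accepted(ledger, buyer, seller, price, accepted):
    """One stateless agent step.

    The agent holds no state of its own: everything it needs arrives as
    arguments, and everything it changes is written to the shared ledger.
    Returns True if payment was settled, False if the work was declined.
    """
    if not accepted:
        return False
    ledger.transfer(buyer, seller, price)
    return True


if __name__ == "__main__":
    ledger = SharedLedger(balances={"buyer": 10})
    # "Is this exactly what I want?" Yes: settle against shared state.
    pay_if_accepted(ledger, "buyer", "agent", 4, accepted=True)
    # No: nothing is written anywhere.
    pay_if_accepted(ledger, "buyer", "agent", 4, accepted=False)
    print(ledger.balances, ledger.log)
```

Because the state lives in the ledger and not in the agent, any other agent could pick up from the same balances and log without asking the first one for anything.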

Here’s how I illustrated that idea in 2017:

Back when I wrote this, quite a few people told me it was word salad hallucination, but I distinctly remember one person - who is now a Pretty Important Person in AI - taking the time to email me that the thesis was really insightful. We’re not there yet, but I think we can see it. Can you see it?

I’ll leave it there. Thanks for reading if you got this far, and I wish you a great time playing, building, and joyfully enjoying this strange new abundance in the software world.

If you’ve made it this far: thanks for reading! I’m back writing here for a limited time, while on parental leave from Shopify. For email updates you can subscribe here on Substack, or find an archived copy on alexdanco.com.